Programming Assignment V: CUDA Programming

Parallel Programming by Prof. Yi-Ping You

Due date: 23:59, December 5, Thursday, 2024

The purpose of this assignment is to familiarize yourself with CUDA programming.


Get the source code:

wget https://nycu-sslab.github.io/PP-f24/assignments/HW5/HW5.zip

unzip HW5.zip -d HW5

cd HW5

1. Problem Statement: Parallelizing Fractal Generation with CUDA

Following up on part 2 of HW2, we will now parallelize fractal generation using CUDA.

Build and run the code in the HW5 directory of the code base. (Type make KERNEL=kernelX to build with kernelX.cu, and srun ./mandelbrot to run it. srun ./mandelbrot --help displays the usage information.)

The following paragraphs are quoted from part 2 of HW2.

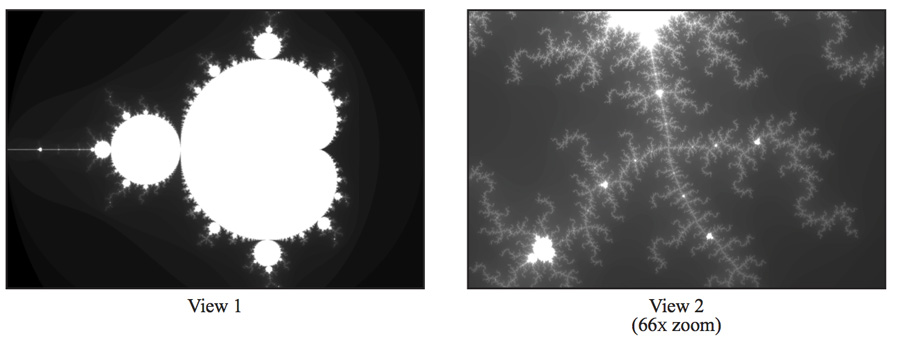

This program produces the image file mandelbrot-test.ppm, which is a visualization of a famous set of complex numbers called the Mandelbrot set. [Most platforms have a .ppm viewer. On the workstations, you can use the tiv command (already installed) to display the resulting images in the terminal.] As the images show, the result is a familiar and beautiful fractal. Each pixel in the image corresponds to a value in the complex plane, and the brightness of each pixel is proportional to the computational cost of determining whether the value is contained in the Mandelbrot set. To get image 2, use the command option --view 2. You can learn more about the definition of the Mandelbrot set.

Your job is to parallelize the computation of the images using CUDA. Starter code that spawns CUDA threads is provided in the function hostFE(), located in kernel.cu. This is the host front-end function: it allocates the memory and launches a GPU kernel.

Currently, hostFE() does not do any computation and returns immediately. You should add code to the hostFE() function and complete mandelKernel() to accomplish this task.
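Before filling in hostFE(), it can help to check the launch geometry on the CPU. The sketch below is plain C++, so it runs without a GPU, and emulates a 2D grid in which each thread computes one pixel (as in Method 1). The grid is sized with a ceiling division so that partial blocks still cover the right and bottom edges, and threads that fall outside the image do nothing. The helper names (ceilDiv, LaunchGeometry, makeGeometry, coverage) are hypothetical and not part of the starter code. In a real kernel, the four loop counters correspond to blockIdx.x/y and threadIdx.x/y, and gridX × gridY and blockX × blockY are the dim3 values passed in the <<<grid, block>>> launch.

```cpp
#include <cassert>
#include <vector>

// Round-up integer division, used to size the grid so that partial
// blocks still cover the right and bottom edges of the image.
int ceilDiv(int a, int b) { return (a + b - 1) / b; }

// Hypothetical launch configuration for a width x height image.
struct LaunchGeometry {
    int blockX, blockY; // threads per block (blockDim)
    int gridX, gridY;   // blocks per grid (gridDim)
};

LaunchGeometry makeGeometry(int width, int height, int blockX, int blockY) {
    assert(blockX > 0 && blockY > 0);
    return {blockX, blockY, ceilDiv(width, blockX), ceilDiv(height, blockY)};
}

// CPU emulation of one-pixel-per-thread indexing: returns how many times
// each pixel (stored row-major) would be written by the simulated threads.
std::vector<int> coverage(int width, int height, const LaunchGeometry& g) {
    std::vector<int> hits(width * height, 0);
    for (int by = 0; by < g.gridY; ++by)
        for (int bx = 0; bx < g.gridX; ++bx)
            for (int ty = 0; ty < g.blockY; ++ty)
                for (int tx = 0; tx < g.blockX; ++tx) {
                    // In CUDA: blockIdx.x * blockDim.x + threadIdx.x, etc.
                    int x = bx * g.blockX + tx;
                    int y = by * g.blockY + ty;
                    if (x >= width || y >= height)
                        continue; // out-of-range thread does no work
                    ++hits[y * width + x];
                }
    return hits;
}
```

If every entry returned by coverage() is exactly 1, the grid covers the image with no gaps and no pixel is written twice, whatever block size you experiment with.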

The kernel will, of course, be based on mandel() in mandelbrotSerial.cpp, which is shown below. You may want to customize it for your kernel implementation.

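```cpp
// Escape-time iteration: returns how many steps of z = z^2 + c run
// before |z| exceeds 2, capped at maxIteration.
int mandel(float c_re, float c_im, int maxIteration)
{
    float z_re = c_re, z_im = c_im;
    int i;
    for (i = 0; i < maxIteration; ++i)
    {
        // |z|^2 > 4 is equivalent to |z| > 2: the point has escaped.
        if (z_re * z_re + z_im * z_im > 4.f)
            break;

        // z = z^2 + c, computed on the real and imaginary parts.
        float new_re = z_re * z_re - z_im * z_im;
        float new_im = 2.f * z_re * z_im;
        z_re = c_re + new_re;
        z_im = c_im + new_im;
    }
    return i;
}
```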
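For context, the serial loop that mandel() plugs into can be sketched as follows. This is plain C++ that restates mandel() so it compiles on its own. It assumes the view is described by its corners (x0, y0) and (x1, y1) and that the output is a row-major array of iteration counts. The function name mandelbrotSerialSketch and its parameters are illustrative and not necessarily those used in mandelbrotSerial.cpp. Because every iteration of the pixel loop is independent, Method 1 can replace the two loop counters with each thread's global x and y indices.

```cpp
#include <cassert>
#include <vector>

// Same escape-time loop as mandel() above.
int mandel(float c_re, float c_im, int maxIteration) {
    float z_re = c_re, z_im = c_im;
    int i;
    for (i = 0; i < maxIteration; ++i) {
        if (z_re * z_re + z_im * z_im > 4.f)
            break;
        float new_re = z_re * z_re - z_im * z_im;
        float new_im = 2.f * z_re * z_im;
        z_re = c_re + new_re;
        z_im = c_im + new_im;
    }
    return i;
}

// Hypothetical serial driver: pixel (i, j) maps to c = (x0 + i*dx, y0 + j*dy)
// and its iteration count is stored at output[j * width + i].
void mandelbrotSerialSketch(float x0, float y0, float x1, float y1,
                            int width, int height, int maxIteration,
                            std::vector<int>& output) {
    assert(width > 0 && height > 0);
    output.assign(width * height, 0);
    float dx = (x1 - x0) / width;
    float dy = (y1 - y0) / height;
    for (int j = 0; j < height; ++j)
        for (int i = 0; i < width; ++i) {
            float x = x0 + i * dx;
            float y = y0 + j * dy;
            output[j * width + i] = mandel(x, y, maxIteration);
        }
}
```

For example, c = 0 never escapes, so mandel(0.f, 0.f, 256) returns 256. The point c = 2 + 2i is already outside the radius-2 circle, so mandel(2.f, 2.f, 256) returns 0.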

2. Requirements

- You will modify only kernel.cu, and use it as the template.
- You need to implement three approaches to solve the problem:
  - Method 1: Each CUDA thread processes one pixel. Use malloc to allocate the host memory, and use cudaMalloc to allocate GPU memory. Name the file kernel1.cu. (Note that you are not allowed to use the image input as the host memory directly.)
  - Method 2: Each CUDA thread processes one pixel. Use cudaHostAlloc to allocate the host memory, and use cudaMallocPitch to allocate GPU memory. Name the file kernel2.cu.
  - Method 3: Each CUDA thread processes a group of pixels. Use cudaHostAlloc to allocate the host memory, and use cudaMallocPitch to allocate GPU memory. You can try different group sizes. Name the file kernel3.cu.
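Methods 2 and 3 allocate device memory with cudaMallocPitch, which returns a row pitch in bytes that may be larger than width * sizeof(int). Row y therefore starts at byte offset y * pitch, not at element y * width. The CPU-only sketch below emulates that layout together with one possible Method 3 grouping, in which each simulated thread handles groupSize consecutive pixels of one row. It then copies the rows back into a compact host array, roughly as cudaMemcpy2D would with these pitches. The 256-byte alignment used to compute the pitch and all helper names are assumptions for illustration; on the GPU, the actual pitch is whatever cudaMallocPitch returns.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <vector>

// Emulated pitch: row size in bytes rounded up to a hypothetical alignment.
std::size_t emulatedPitch(int width, std::size_t align = 256) {
    std::size_t rowBytes = width * sizeof(int);
    return (rowBytes + align - 1) / align * align;
}

// Store v at pixel (x, y) of a pitched buffer: skip y rows of `pitch` bytes,
// then x ints. (A kernel would cast the row pointer to int*; memcpy keeps
// this CPU emulation free of aliasing issues.)
void storeAt(unsigned char* base, std::size_t pitch, int x, int y, int v) {
    std::memcpy(base + y * pitch + x * sizeof(int), &v, sizeof v);
}

// Each simulated thread (tx, y) handles groupSize consecutive pixels of
// row y, starting at x = tx * groupSize. value(x, y) stands in for mandel().
template <typename F>
void fillGrouped(unsigned char* base, std::size_t pitch, int width,
                 int height, int groupSize, F value) {
    int threadsPerRow = (width + groupSize - 1) / groupSize;
    for (int y = 0; y < height; ++y)
        for (int tx = 0; tx < threadsPerRow; ++tx)
            for (int k = 0; k < groupSize; ++k) {
                int x = tx * groupSize + k;
                if (x >= width)
                    break; // the last group in a row may be partial
                storeAt(base, pitch, x, y, value(x, y));
            }
}

// Copy `height` rows of `width` ints from the pitched buffer into a
// compact row-major array (source pitch = pitch, destination pitch = width).
void copyRows(int* dst, const unsigned char* src, std::size_t pitch,
              int width, int height) {
    assert(pitch >= width * sizeof(int));
    for (int y = 0; y < height; ++y)
        std::memcpy(dst + y * width, src + y * pitch, width * sizeof(int));
}
```

With groups of groupSize pixels along each row, a Method 3 launch needs only about width / groupSize threads per row instead of width. Grouping along columns or in 2D tiles is an equally valid choice to experiment with.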

3. Grading Policy

NO CHEATING!! You will receive no credit if you are found cheating.

Total of 101%:

- Implementation correctness: 81%
  - kernel1.cu: 27%
  - kernel2.cu: 27%
  - kernel3.cu: 27%
  - For each kernel implementation,
    - (12%) the output (in terms of both views) should be correct for any maxIteration between 256 and 100000, and
    - (15%) the speedup over the reference implementation should always be greater than 0.6x (kernel1 & kernel2) / 0.3x (kernel3) for maxIteration between 256 and 100000 regarding VIEW 1.
- Competition: 20%
  - Use kernel4.cu to compete with the reference time. The competition is judged by the running times of your program for generating both views for a maxIteration of 100000, using the metric below.
    - VIEW 1: 10%
    - VIEW 2: 10%

Metric for each view:

\[
\begin{cases} 40\% + \dfrac{T-Y}{T-R} \times 60\%, & \text{if } Y < T \\ 20\%, & \text{else if } Y < 2R \end{cases}
\]

where $Y$ and $R$ represent your program's execution time and the reference time, respectively, and $T = 1.5R$.

Programs that run in under 2x the reference time earn 20%. Those under 1.5x earn 40%, plus a share of an additional 60% that grows linearly as the runtime drops from 1.5x to 1x the reference time.
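To sanity-check the metric, here is a small C++ sketch of it. viewScore is a hypothetical helper, and times can be in any consistent unit. Returning 0 when Y ≥ 2R is an assumption, because the metric lists no case for slower programs. As written, the first case also exceeds 100% when Y < R, and the sketch does not cap it.

```cpp
#include <cassert>

// Per-view competition score in percent, following the metric above.
// Y = your execution time, R = reference time, T = 1.5 * R.
// Assumption: Y >= 2R scores 0 (the metric does not list that case).
double viewScore(double Y, double R) {
    assert(R > 0.0);
    double T = 1.5 * R;
    if (Y < T)
        return 40.0 + (T - Y) / (T - R) * 60.0; // linear between 1.5R and R
    if (Y < 2.0 * R)
        return 20.0;
    return 0.0;
}
```

For example, matching the reference time (Y = R) gives 40% + 60% = 100%. Running at 1.25x the reference time gives 40% + 0.5 × 60% = 70%. Any time from 1.5x up to (but not including) 2x gives 20%.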

4. Evaluation Platform

Your program should be able to run on UNIX-like OS platforms. We will evaluate your programs on the workstations dedicated to this course. You can access these workstations via ssh using the following information.

The workstations run Debian 12.6.0 with Intel(R) Core(TM) i5-10500 CPU @ 3.10GHz or Intel(R) Core(TM) i5-7500 CPU @ 3.40GHz processors and GeForce GTX 1060 6GB GPUs. g++-12, clang++-11, and CUDA 12.5.1 are installed.

| Hostname | IP | Port | User Name |
|---|---|---|---|
| hpc1.cs.nycu.edu.tw | 140.113.215.198 | 10001-10005 | {student_id} |

Login example:

ssh <student_id>@hpc1.cs.nycu.edu.tw -p <port>

Please run the provided testing script test_hw5 before submitting your assignment.

Run test_hw5 on the workstation in a directory that contains your HW5_XXXXXXX.zip file. test_hw5 checks whether the ZIP hierarchy is correct and runs graders to check your answers; the results are for reference only. For faster feedback during performance tuning, you can pass the -s (--kernel4-only) option to skip testing the first three kernels, assuming that you have already passed them.

5. Submission

All your files should be organized in the following hierarchy and zipped into a .zip file, named HW5_xxxxxxx.zip, where xxxxxxx is your student ID.

Directory structure inside the zipped file:

- HW5_xxxxxxx.zip (root)
  - kernel1.cu
  - kernel2.cu
  - kernel3.cu
  - kernel4.cu

Zip the file:

zip HW5_xxxxxxx.zip kernel1.cu kernel2.cu kernel3.cu kernel4.cu

Be sure to upload your zipped file to the new E3 e-Campus system by the due date.

You will get NO POINTS if your ZIP's name is wrong or the ZIP hierarchy is incorrect.

You will get a 5-point penalty if you hand in unnecessary files (e.g., obj files, .vscode, .__MACOSX).