Programming Assignment II: Multi-thread Programming

Parallel Programming by Prof. Yi-Ping You

Due date: 23:59, October 16, Thursday, 2025

The purpose of this assignment is to familiarize yourself with Pthread and std::thread programming in C and C++, respectively. You will also gain experience measuring and reasoning about the performance of parallel programs (a challenging, but important, skill you will use throughout this class). This assignment involves only a small amount of programming, but a lot of analysis!

Table of contents

We provide workstations dedicated to this course. You can access the workstations by ssh or use your own environment to complete the assignment.

| Hostname | IP |

|---|---|

| hpclogin[01-03].cs.nycu.edu.tw | 140.113.17.[101-103] |

Login example:

ssh <username>@hpclogin[01-03].cs.nycu.edu.tw

Additionally, we have configured the environment for you, which MUST be activated before running the assignment code using the following command:

module load ppYou can leave the environment by typing

module unload pp.

Please download the assignment code and unzip it:

wget https://nycu-sslab.github.io/PP-f25/assignments/HW2/HW2.zip

unzip HW2.zip

cd HW2

0. Using Workstation

In this assignment, you’ll need to use option -c to allocate physical threads for running your multi-threaded program on the dedicated server. If you don’t specify the number of physical threads, only 1 physical thread will emulate all the threads you use, leading to poor performance.

You can allocate up to 6 threads on the server. Since resources are shared among all students, please allocate only the number of threads you need. Here is an example of how to run a program with 6 physical thread allocated:

run -c 6 -- ./pi.out 6 100000000

You can also profile your multi-threaded program as well. Here is an example of how to profile it using the built-in Bash time command:

run -c 6 -- bash -c "time ./pi.out 6 100000000"

1. Part 1: Parallel Counting PI Using Pthreads

Please create the part1 folder:

mkdir part1

cd part1

1.1 Problem Statement

This is a follow-up assignment from Assignment ZERO. You are asked to turn the serial program into a Pthreads program that uses a Monte Carlo method to estimate PI. Your program should take the total number of tosses and print the estimate of PI. You may want to use long long int for the number of hits in the circle and the number of tosses, since both may be very large to get a reasonable estimation.

Your task is to make the program as fast as possible. You may consider various speedup methods, such as SIMD intrinsics or a faster random number generator. However, you cannot break the following rules: you must implement the Monte Carlo method using Pthreads.

You may want to use a reentrant and thread-safe random number generator.

You are allowed to use third-party libraries in this part, such as pseudorandom number generators or SIMD intrinsics.

1.2 Requirements

- Typing

makein thepart1directory should build the code and produce an executable calledpi.out. pi.outtakes two command-line arguments: the number of threads and the number of tosses. The argument value will not exceed the range ofintandlong long int, respectively.pi.outshould work well for all legal inputs.pi.outshould output (to stdout) the estimated PI value, which is accurate to three decimal places (i.e., 3.141xxx) with at least1e8tosses. The output must be exactly a plain number followed by a newline, with no additional characters. For instance,printf("%lf\n", pi);is acceptable.

Example:

$ make && run -c 6 -- ./pi.out 6 100000000

3.1415926

2. Part 2: Parallel Fractal Generation Using std::thread

Please enter the part2 folder:

cd part2

2.1 Problem Statement

Build and run the code in the part2 directory of the code base. (Type make to build, and run -- ./mandelbrot to run it. run -- ./mandelbrot --help displays the usage information.)

This program produces an image file mandelbrot-serial.ppm, which is a visualization of a famous set of complex numbers called the Mandelbrot set. [Most platforms have a .ppm viewer. For example, you can use the catimg command to view the resulting images on the dedicated server.]

Example:

catimg -H 80 mandelbrot-serial.ppm

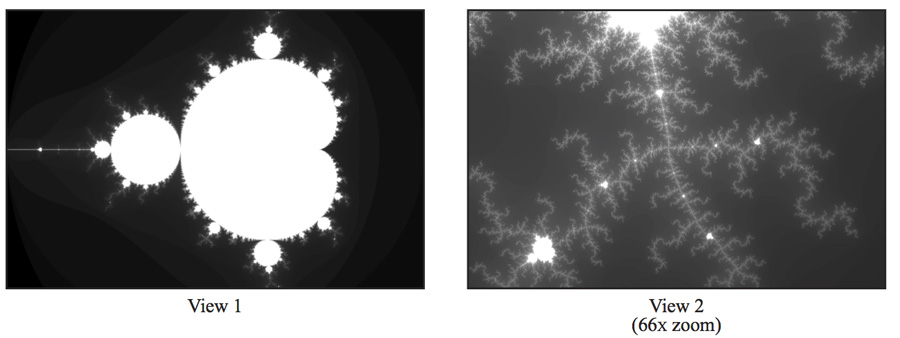

As you can see in the images below, the result is a beautiful fractal. Each pixel corresponds to a value in the complex plane, and the brightness of each pixel is proportional to the computational cost of determining whether the value is contained in the Mandelbrot set. To get image 2, use the command option --view 2. (See function mandelbrot_serial() defined in mandelbrot_serial.cpp). You can learn more about the definition of the Mandelbrot set.

Your job is to parallelize the image computation using std::thread. Starter code that spawns an additional thread is provided in the function mandelbrot_thread() located in mandelbrot_thread.cpp. In this function, the main thread creates an additional thread using the constructor std::thread (function, args…), and waits for the thread to complete by calling join() on the thread object.

Currently, the launched thread returns immediately. You should implement the worker_thread_start() function to accomplish this task. You don’t need any other std::thread API calls in this assignment.

2.2 Requirements

You will need to meet the following requirements and answer the questions (“Q1-Q4”) in a REPORT using HackMD.

- Modify the starter code to parallelize Mandelbrot generation with 2 processors. Specifically, decompose the problem spatially by computing the top half of the image with thread 0 and the bottom half of the image with thread 1.

- Extend your code to use 2, 3, 4, 5, and 6 threads by decomposing the image into blocks for each thread.

- Q1: In your write-up, plot a graph of speedup (compared to the reference sequential implementation) as a function of the number of threads used for VIEW 1. Is the speedup linear to the number of threads used? Hypothesize why this is (or is not) the case. (You may want to plot a graph for VIEW 2 for further insights.

Take a careful look at the 3-thread data point.

- To confirm (or disprove) your hypothesis, measure the amount of time each thread requires to complete its work by inserting timing code at the beginning and the end of

worker_thread_start().- Q2: How do the measurements explain the speedup graph you previously plotted?

- Modify the work decomposition for threads to achieve a speedup of 3-4x on both views (if you’re above 3.5x that’s fine, don’t sweat it). Your solution may NOT use synchronization between threads. We expect you to come up with a single work decomposition policy that works well for any thread counts—hard-coding a solution for each configuration is not allowed!

- Q3: In your write-up, describe your parallelization approach and report the final speedup achieved with 4 threads.

A simple static assignment can achieve this goal without communication/synchronization among threads.

- Now run your improved code with 12 threads using the command:

run -c 6 -- ./mandelbrot -t 12- Q4: Is the performance noticeably better than with 6 threads? Why or why not?

Note that the workstation server provides 6 threads on 6 cores.

3. Grading Policy

NO CHEATING!! You will receive no credit if you are found cheating. Don’t take any chances. 😉

Total of 100%:

- Part 1 (40%):

- Correctness (30%): The requirements should be met, and your parallelized program should run faster than the original (serial) program. Notice that you will receive no credit if one of the two aforementioned conditions fails.

- Scalability (30%): We will evaluate your program with 2, 3, 4 or more threads. Your program is expected to be scalable.

- Performance (40%): Your program will be evaluated on the workstation with:

run -c 4 -- bash -c "time (./pi.out 3 100000000; ./pi.out 4 100000000)". The maximum time limit isT=1.00and the reference time isR_60=0.224, R_100=0.067. See the metrics below for details.

- Part 2 (60%):

- Correctness (30%): Your parallelized program should pass the verification (written in

main.cpp) and run faster than the original (serial) program. Notice that you are not allowed to modify files other thanmandelbrot_thread.cppand you will receive no credit if one of the two aforementioned conditions fails. - Performance (30%): Your program will be evaluated on the workstation with:

run -c 3 -- ./mandelbrot -t 3plusrun -c 4 -- ./mandelbrot -t 4. The maximum time isT = 0.375and the reference time isR_60=0.240, R_100=0.230(considering only the time ofmandelbrot thread). See the metrics below for details. - Questions (40%): Each question contributes 10%. Answers to each question will be classified into one of the four reward tiers: excellent (10%), good (7%), normal (3%), and terrible (0%).

- Correctness (30%): Your parallelized program should pass the verification (written in

Performance metrics (100%):

- Maximum time limit (20%): Your program must complete execution within the threshold

Tseconds.- Reference Time (80%): To earn the first 40%, your program needs to run within

R_60, and earn the remaining if it runs faster thanR_100.

4. Evaluation Platform

Your program should be able to run on UNIX-like OS platforms. We will evaluate your programs on the dedicated workstations.

The workstations are based on Debian 12.9 with Intel(R) Core(TM) i5-10500 CPU @ 3.10GHz processors and GeForce GTX 1060 6GB. g++-12, clang++-11, and CUDA 12.8 have been installed.

5. Submission

All your files should be organized in the following hierarchy and zipped into a .zip file, named HW2_xxxxxxx.zip, where xxxxxxx is your student ID.

Directory structure inside the zipped file:

HW2_xxxxxxx.zip(root)part1(directory)Makefilepi.corpi.cpp- other C/C++ files

part2(directory)mandelbrot_thread.cpp

url.txt

Zip the file:

zip HW2_xxxxxxx.zip part1/* part2/mandelbrot_thread.cpp url.txt

Notice that you just need to provide the URL of your HackMD report in url.txt, and enable the write permission for someone who knows the URL so that TAs can give you feedback directly in your report.

Please run the provided testing script test_hw2 before submitting your assignment.

Run test_hw2 in a directory that contains your HW2_XXXXXXX.zip file on the workstation. test_hw2 checks if the ZIP hierarchy is correct and runs graders to check your answer, although for reference only.

test_hw2 <your_student_id>

- It may take a few minutes to complete the testing.

Please make sure you pass the testing script and upload your zipped file to the new E3 e-Campus system by the due date.

You will get NO POINT if your ZIP’s name is wrong or the ZIP hierarchy is incorrect.

You will get a 5-point penalty if you hand out unnecessary files (e.g., obj files, .vscode, .__MACOSX).

6. References

Here are some resources for you: